“New directions in science are launched by new tools much more often than by new concepts.”

Freeman Dyson

A cloud, an umbrella, a question

This morning, my phone says there is an eighty-percent chance of rain at four. I slip an umbrella into my bag before I leave. Nothing heroic here, just a small recognition that the future can sometimes be known a few hours in advance, give or take a little uncertainty.

An hour later I’m in a meeting about next quarter’s churn. We flip through last year’s surveys, a handful of interviews, plans for a focus group. For storms we trust satellites and supercomputers; for human behavior we rely on the same tools we used a hundred years ago. No wonder these predictions often miss.

Both the weather and human society are complex, dynamic systems with countless interacting variables. For centuries, both seemed impossible to predict accurately. Weather was often deemed an act of nature or divine whim; human behavior was seen as too free or capricious to forecast at all. Early techniques in both fields were rudimentary. Yet while meteorology has made remarkable progress, our ability to predict human behavior has stalled.

Why did clouds surrender their secrets while crowds did not?

The short answer is tools. Meteorology advanced through a century of better sensors and faster chips. Social science never experienced the same kind of progress. But that may be starting to change. Language models could offer a breakthrough for human forecasting, much like early computers once did for weather.

The remarkable progress of meteorology

London, August 1861. Vice-Admiral Robert FitzRoy sits in the new Meteorological Department, studying telegraph reports from coastal stations. He sketches a rough pressure map, then writes a line for tomorrow’s Times:

“Weather for the 1st and 2nd: fine in the north and west; fresh winds in the south.”

Robert FitzRoy

It is the world’s first public weather forecast. The Times prints it, then ridicules it: “Science is not wizardry.” Parliament dismisses it as fortune-telling. Cartoonists call FitzRoy the “Storm-King.” Two days later, a storm hits the English Channel. Sailors who read the notice take cover early and survive.

FitzRoy’s insight was not based on a new concept. Meteorology already knew that falling pressure signals a storm. What changed were the tools: barometers, telegraph wires, a national grid of observers. FitzRoy took his life four years later, exhausted, ridiculed, and deep in debt. He died convinced he had failed, unaware that his forecasts were already saving lives.

Somme, 1916. Outside Lewis Fry Richardson’s mobile ambulance, World War I rages. In quiet moments between patients, the mathematician tries to predict tomorrow’s weather by hand. He divides Europe into grid boxes, plugs numbers into equations, and after six weeks of calculation, produces a six-hour forecast. Too slow to be useful, but decades ahead in principle.

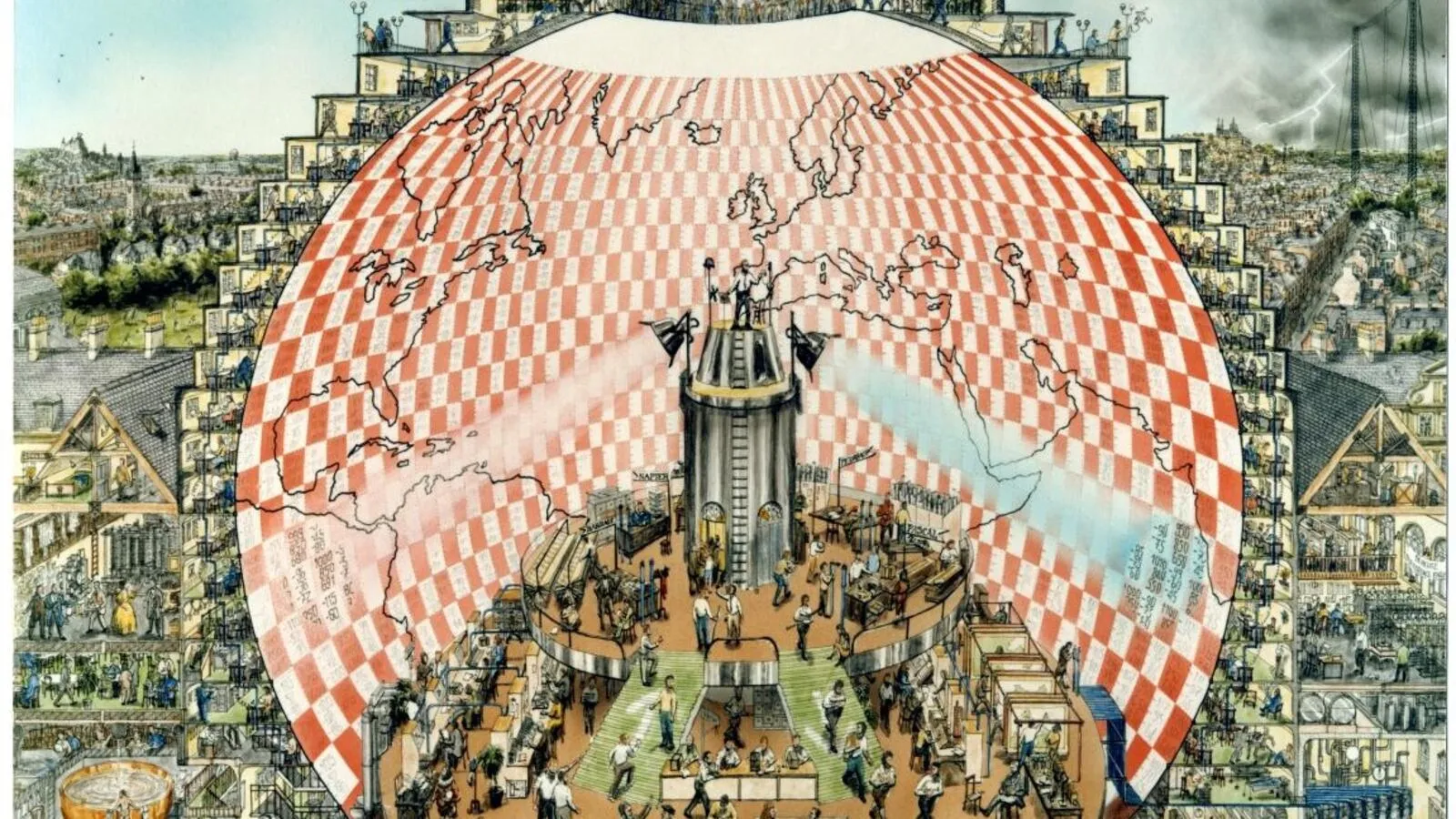

Richardson imagines a “Forecasting factory”, in which forecasts are produced by 64,000 mathematicians each calculating weather equations for a small portion of the sky. He dies before silicon arrives, but his fantasy anticipates the architecture of modern supercomputers.

Lewis Fry Richardson’s Weather Forecasting Factory, in which 64,000 human mathematicians fill a theater-like hall, each calculating weather equations for a portion of the world map, all orchestrated to procude a single forecast.

Aberdeen Proving Ground, Maryland, March 1950. Jule Charney, Ragnar Fjørtoft, and John von Neumann run ENIAC, the first general-purpose electronic computer. The 30-ton machine of wires and vacuum tubes is housed in a converted ballroom. It consumes enough electricity to black out a village. The work is slow and fragile. Punch cards are swapped between calculations, and a single error can crash everything.

The team feeds ENIAC a simplified atmospheric model. The forecast covers a slice of North America on a grid with 736-km spacing, far too coarse to resolve local storms. ENIAC runs for twenty-four hours and spits out a twenty-four-hour forecast. It barely keeps up with real time, but the frontier has been crossed. Richardson’s factory is no longer a fantasy. It is just underpowered.

Cape Canaveral, April 1960. A 270-pound satellite named TIROS-1 spins into orbit and sends back blurry strips of cloud cover. In seventy days, it produces more than 19,000 images. For the first time, meteorologists can see typhoons curling over oceans where no ship sails.

Then everything accelerates. Within two years, continuous global coverage is routine. Grid cells shrink from 736 km to 1.5 km, satellites begin sending a fresh picture of the hemisphere every thirty minutes, and today’s models process over forty million observations a day, more than a thousandfold increase since the ENIAC era.

The progress of modern meteorology is astounding: one-day temperature forecasts are 96 to 98 percent accurate, four-day outlooks now match the precision of a one-day forecast from the 1990s, and hurricane-track errors have fallen by 75 percent. The economic and human dividends, from lives saved to power loads balanced and crops spared, are incalculable.

Crowds without radar

The early social scientists dreamt as boldly as the meteorologists. In Paris, a generation before FitzRoy published his first forecasts, Auguste Comte imagined a new discipline he called social physics: a science in which sociologists could predict humanity’s future as precisely as astronomers plot a comet’s course.

But social science didn’t have the instruments that propelled meteorology forward. Its core methods – surveys, polls, interviews, focus groups – are valuable for studying human behavior, but they are slow, low-resolution, and difficult to scale. And they have barely evolved in decades.

While weather forecasts have improved dramatically, predictions in social science remain inconsistent and unreliable.

- 1936. Literary Digest polls more than two million people by postcard and confidently calls the U.S. election for Alf Landon. Franklin Roosevelt wins in a historic landslide. The poll is off by 38 percentage points.

- 1984-2004. Psychologist Philip Tetlock tracks 82,000 expert predictions. On average, their accuracy is only slightly better than chance.

- 2015. The replication crisis hits psychology. One hundred published studies are rerun, and only 36 percent reproduce the original findings. Similar efforts in other social sciences produce equally concerning results.

- 2015-2025. Polls miss Brexit. They miss Trump. Behavioral scientists struggle to model behavior during COVID-19. Economists wrongly forecast recessions from higher interest rates.

The list goes on. Each failure sparked debate, but no structural breakthrough. Social science had no barometer, no satellite feed, no six-hour update cycle. Just another round of analysis, and often another miss.

Why weather forecasting improved

Meteorology advanced to real-time rain alerts because the tools kept improving. Thanks to Moore’s law, each generation of faster chips enabled four reinforcing forces that closed the gap between what the sky is doing and what it will do next:

1. High-resolution data

It started with a handful of coastal barometers. Today, a global network of satellites captures the planet every few minutes, radar scans storms in 3D, and jetliners live-stream atmospheric data.

2. Stronger models

Early equations were simple, more like toy models than true representations of the atmosphere. But as computing power grew, meteorologists transformed them into full-scale simulations: tiny digital Earths that can model tomorrow’s weather before tomorrow arrives.

3. Faster feedback

Forecasts age fast. Modern centres like the UK Met Office rerun their global models four times a day.

They pull in fresh data, simulate the future, update their predictions. Missed predictions are caught and corrected in near real time.

4. Public accountability

Forecasters have skin in the game. Every prediction is time-stamped, archived, and graded in public. Mistakes are visible, but so is progress. The result is a system built on accountability, with constant pressure to improve.

Meteorology built a system that observes continuously, updates frequently, and improves in public. The result is a steady rise in accuracy, longer forecast time horizons, and growing trust in its predictions.

Language models – the missing instrument for human behavior

Social science has lacked tools that scale with compute, capture fine-grained dynamics, and improve through feedback. We believe language models may offer a breakthrough. While still early and imperfect, they point to a new generation of tools that can do more than generate text. They offer a way to simulate and study human behavior at scale.

Language models are AI systems trained on vast amounts of text from the internet, books, articles, and more. Their training objective is to learn the statistical patterns of language, but in the process they also encode broad knowledge of human behavior, norms, and culture. This makes them not just tools for text generation, but instruments for a broader understanding of human behavior.

Language models do not “think”. But they can emulate how people might speak and, by extension, how they might behave in different scenarios. This gives them potential as behavioral simulators. Instead of asking a few hundred survey respondents about hard-to-imagine hypotheticals, we can now query behavioral patterns embedded in trillions of words.

Researchers are already testing language models’ ability to emulate human behavior in surveys, behavioral games, and decision-making tasks. Others are studying emergent group behavior by simulating interactions among many agents, each with distinct goals, memory, and social dynamics. These early efforts function much like weather simulations, but for individual and group behavior instead of atmospheric systems.

What’s exciting is that this is tool-driven progress. Language models are not a school of thought in social science. They are a new kind of instrument, one that scales with Moore’s law. They open the door to high-frequency behavioral data and more adaptive, dynamic models: the same forces that transformed meteorology.

Of course, there are real and well-documented limitations. Language models hallucinate, inherit bias from training data, and often fail when pushed too far from the distributions they were trained on. They are not reliable across all contexts, and much about their inner workings remains opaque.

But the lesson from meteorology is to use imperfect models carefully, observe where they fail, and improve them iteratively. FitzRoy’s early forecasts were often wrong. But they were published, tested, and refined. Progress came from the loop between observation, simulation, and revision.

As we build these new behavioral simulations, we may uncover patterns we didn’t know to look for. As Freeman Dyson wrote: “The effect of a tool-driven revolution is to discover new things that have to be explained.” The next big ideas in sociology or economics might very well come from large-scale simulations of silicon humans.

Forecasts carry consequences

Robert FitzRoy’s suicide was driven partly by ridicule, but also by his awareness that forecasts have consequences. Each storm warning he wired to the coast might keep a crew in harbour or, if he were wrong, cost them their livelihood.

Lewis Fry Richardson walked away from government weather work when he realized his equations could aid bombing raids. Later he tried to model the outbreak of wars, hoping that if conflict could be forecast, it might also be prevented.

Both men understood that forecasts are never just numerical outputs from a mathematical model. Forecasts steer behavior, and that gives them moral weight.

That responsibility matters even more when predicting human behavior rather than storm patterns. A prediction can nudge a person’s choice before a single word of policy is written. Forecasts about crowds should always seek to increase human agency: like a weather forecast, they should give people a clear map so they can choose their own paths.

Where the umbrella points

We believe language models could become a powerful new instrument for social science. It’s early, but we’re starting to see signs of real progress.

Social science has struggled not from a lack of ambition, but from a lack of tools. In meteorology, better instruments allowed forecasters to take advantage of denser data, stronger models, faster feedback loops, and public accountability. With each cycle, forecasts became sharper, more grounded, and more useful.

But meteorology also teaches humility. A one-hour forecast is highly reliable. One day out, it is still strong. By four days, accuracy begins to fade, and beyond two weeks, the atmosphere’s chaotic dynamics defeat detailed prediction. Social science will face similar limits: domains where complexity overwhelms predictability. That’s not failure. It’s a feature of human agency.

Unlike the weather, humans are reflexive. We respond to predictions. We adapt our behavior as our information and beliefs shift. This makes social forecasting not only harder but also more ethically charged. It requires transparency about uncertainty, clarity about limitations, and care in how predictions are communicated and used.

Social science models will never be perfect. They will never fully capture the richness of human behavior. We don’t think that’s a flaw, and we don’t believe perfection should be the goal. What matters is usefulness. We believe language models, used thoughtfully, can become powerful tools for daily decision making, and more broadly, for helping us understand ourselves. Prediction in social science will never be complete. But it can improve, just as it did in meteorology.